8 Techniques for Dealing with Non-Normal, Categorical, and Ordinal Data

Chris Bailey, PhD, CSCS, RSCC

Most of the types of analysis discussed and demonstrated up to this point of the book belong to a category called parametric assessments. This means that they make assumptions about the parameters of the population the data come from, one of which is that the data are normally distributed. As discussed earlier, not all data are normally distributed. This is problematic for parametric assessments that assume data normality. When working with data that are not normally distributed, a nonparametric test should be run in lieu of a parametric method. Fortunately, there are nonparametric alternatives for many of the assessment methods that have been previously discussed. This chapter will highlight those along with how and when to use them.

Chapter Learning Objectives

- Differentiate between parametric and nonparametric analysis methods

- Gain an understanding of several nonparametric statistical analysis methods and when to use each

- Gain experience using and interpreting nonparametric analysis methods with provided datasets

Parametric and Nonparametric Statistics

Parametric

As mentioned above, parametric statistical methods require that data are normally distributed. This is because parametric assessments make assumptions about the normality of the data included in the analysis. Whenever we want to run any statistical test, any assumptions of that test should be checked. If a distribution or distributions aren't normally distributed, parametric tests should not be run.[1][2] Parametric tests include any that make this assumption of normality, which includes many that have been discussed before (e.g. t tests, ANOVAs, correlation, and most types of regression). Along with normality, parametric assessments also assume that the data are numerical, and specifically must be scale or continuous. Nominal and ordinal data cannot be used for parametric statistical assessments. One caveat is that nominal data may be used to create groups for parametric assessments, but the variables that are being used in those analyses must be scale data. Another assumption of parametric tests is homogeneity of variances when working with multiple groups. Each group should show a similar amount of variability. If one group has a substantially different variance than another, this assumption is violated.

Fortunately, there are statistical tests that do not make these assumptions and they fall into the nonparametric category. For nearly every parametric statistical test, there is also a nonparametric substitute that can be run if the above assumptions are not met.

Parametric test assumptions of the data

- numerical in nature (scale or continuous)

- normality

- homogeneity of variance between groups

Nonparametric

Nonparametric statistical assessments do not make any assumptions on the normality of the data. These tests may also be called "distribution free" because they do not make assumptions based on the data distribution. This means that they can work with data that have outliers, are skewed, have high levels of kurtosis, have differing levels of variance, and/or violate normality in another way. They have the added benefit of also working with nominal and ordinal data types.

Why not always run nonparametric tests?

There are many benefits to running a nonparametric test, primarily that they do not have as many assumptions that need to be tested. This often leads many to the thought that they should exclusively use nonparametric tests. Unfortunately, nonparametric tests also have some weaknesses when compared to their parametric counterparts. First, nonparametric tests are often less statistically powerful than parametric tests when working with normal distributions.[3] This means that, when the data are normal, parametric tests will lead to fewer errors in decision making. Another disadvantage is that nonparametric tests may be harder to interpret. As will be discussed in more depth later on, nonparametric methods may use rankings of data instead of the raw data values. So, if a test shows that the mean rank differs between the groups by 7 places, that isn't always helpful, since it isn't known how much difference there is between each rank (please review the ordinal data section of Chapter 2 for more on this topic).
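To make the point about ranks concrete, the following is a minimal sketch in Python of how raw values are converted to ranks. The jump heights are hypothetical values invented for illustration, and the helper name `average_ranks` is our own. Tied values share the mean of the ranks they occupy, which is a common convention that we assume here.

```python
def average_ranks(values):
    """Rank values from smallest (rank 1) to largest; ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        # Extend j to cover every value tied with the one at sorted position i.
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1  # mean of the 1-based positions i+1 .. j+1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


# Hypothetical vertical jump heights (cm) for five athletes.
jump_cm = [41.0, 41.5, 42.0, 60.0, 42.0]
jump_ranks = average_ranks(jump_cm)
print(jump_ranks)  # [1.0, 2.0, 3.5, 5.0, 3.5]
```

The best jump is 18 cm higher than the next best, yet it sits only 1.5 ranks above it. That lost magnitude is the reason a difference expressed in ranks can be harder to interpret.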

Testing for Normality and Homogeneity

Please review Chapter 2 as the evaluation of normality was previously discussed and demonstrated there. Homogeneity of variance was previously discussed in Chapter 6. Examples of each test will be listed below, but the most commonly used tests are the Shapiro-Wilk test for normality and Levene's test for homogeneity of variances.

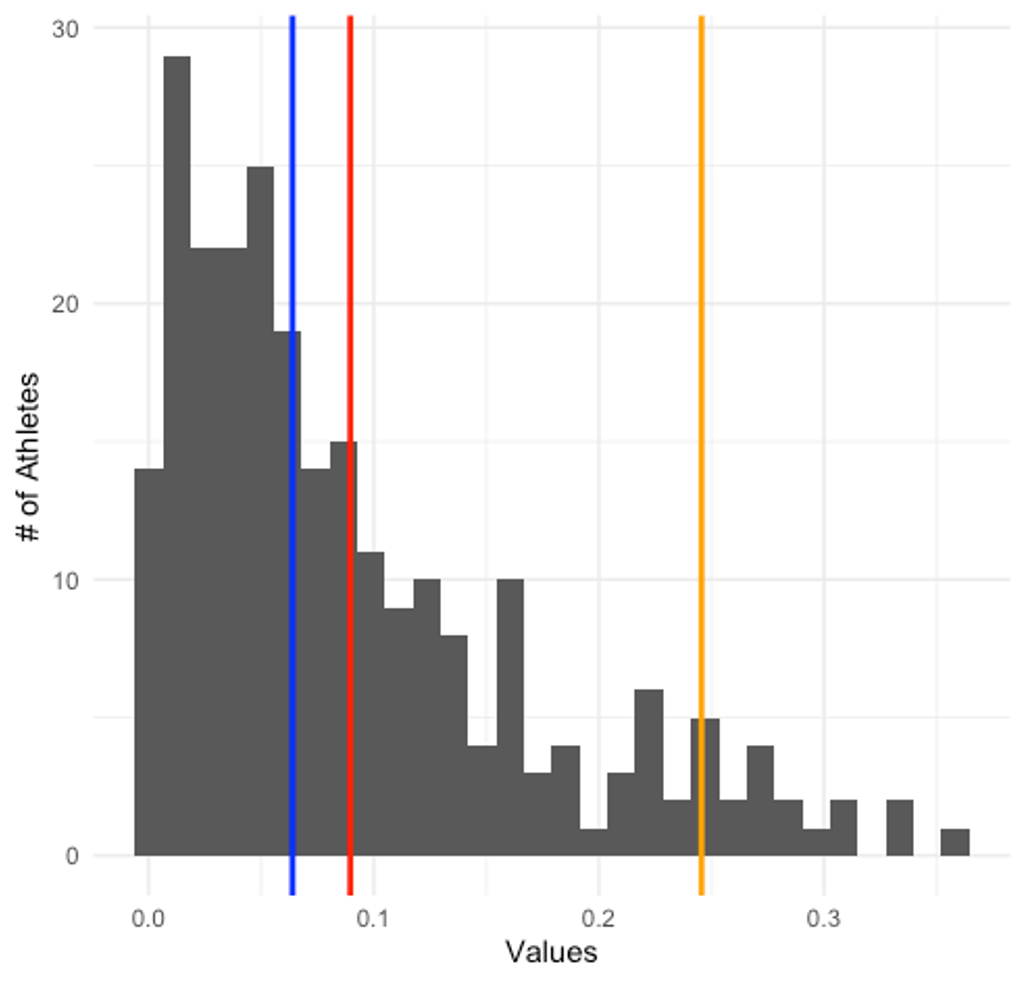

Visual inspection may be used as a first method of assessment for normality, but a statistical approach is always best. Unfortunately, MS Excel users may be limited to the visual approach, as statistical evaluation of normality in Excel is limited to skewness and kurtosis. In order to go further, one will need to use a program like JASP or something similar. The Shapiro-Wilk test has been shown to provide better statistical power than the Kolmogorov-Smirnov test, which may be the main reason it is often chosen.[4]

Tests of Normality

- Visual Inspection

- Shapiro-Wilk test

- Kolmogorov-Smirnov (K-S) test
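Skewness and kurtosis, mentioned above as the measures available in Excel, can also be computed by hand. The sketch below uses the simple moment-based definitions and hypothetical data. Excel's built-in functions apply small-sample adjustments, so their output will differ slightly from these values. The function name is our own.

```python
def skewness_and_excess_kurtosis(values):
    """Moment-based skewness and excess kurtosis (no small-sample adjustment)."""
    n = len(values)
    mean = sum(values) / n
    m2 = sum((x - mean) ** 2 for x in values) / n  # second central moment
    m3 = sum((x - mean) ** 3 for x in values) / n  # third central moment
    m4 = sum((x - mean) ** 4 for x in values) / n  # fourth central moment
    skew = m3 / m2 ** 1.5
    excess_kurtosis = m4 / m2 ** 2 - 3.0  # 0 for a normal distribution
    return skew, excess_kurtosis


# Hypothetical samples: one symmetric, one with a long right tail.
symmetric = [1, 2, 3, 4, 5]
right_skewed = [1, 1, 1, 2, 10]

sym_skew, sym_kurt = skewness_and_excess_kurtosis(symmetric)
right_skew, right_kurt = skewness_and_excess_kurtosis(right_skewed)
print(sym_skew, sym_kurt)
print(right_skew, right_kurt)
```

A skewness near zero suggests symmetry, while a clearly positive value, as in the second sample, points to a right tail. These descriptive values do not replace a formal test such as the Shapiro-Wilk.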

When testing for homogeneity, the test selection should be made based upon the situation. Both Levene's and Bartlett's tests are suitable for working with multiple groups, but the Breusch-Pagan test should be used when working with a single group and is most common in regression. When comparing multiple groups, these tests are commonly referred to as tests of "homogeneity of variance" between the groups, but when evaluating the variance in regression, it is more often referred to as a test of homoscedasticity. You may recall from Chapter 6 that homoscedasticity occurs when the measure magnitude does not impact the chance of producing an error (variance). The opposite is true with heteroscedasticity, where very large or very small values are more likely to have more variability. It is likely that quite a lot of sport performance data are heteroscedastic.[5]

Tests of Homogeneity of Variance

- Levene's test

- Bartlett's test

- Breusch-Pagan test
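As a rough illustration of what Levene's test does, the sketch below computes its statistic as a one-way ANOVA F ratio on the absolute deviations of each score from its group mean. The data are hypothetical and the function name is our own. Some software centers on the group median instead of the mean, so treat the mean-centered form here as an assumption and not as a description of any particular program.

```python
def levene_statistic(*groups):
    """Levene's W: an ANOVA F ratio on absolute deviations from each group mean."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    # Replace every score with its absolute distance from its own group mean.
    abs_dev = []
    for g in groups:
        mean = sum(g) / len(g)
        abs_dev.append([abs(x - mean) for x in g])
    grand_mean = sum(sum(z) for z in abs_dev) / n_total
    group_means = [sum(z) / len(z) for z in abs_dev]
    between = sum(len(z) * (m - grand_mean) ** 2
                  for z, m in zip(abs_dev, group_means))
    within = sum((v - m) ** 2
                 for z, m in zip(abs_dev, group_means) for v in z)
    return (n_total - k) / (k - 1) * between / within


# Hypothetical groups with the same mean but different spread.
wide = [10, 12, 14, 16, 18]
narrow = [13, 13.5, 14, 14.5, 15]
w_unequal = levene_statistic(wide, narrow)

# Shifting a group changes its mean but not its spread.
shifted = [x + 5 for x in wide]
w_equal = levene_statistic(wide, shifted)
print(w_unequal, w_equal)
```

The statistic is compared against an F distribution with k - 1 and N - k degrees of freedom to obtain the p value. A large statistic, as with the first pair, indicates unequal variances, while two groups with identical spread give a statistic of zero.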

While each test should always be selected based on the individual situations, this isn't always the case in reality. As mentioned previously, most published studies in Kinesiology will evaluate normality with a Shapiro-Wilk test and a Levene's test for evaluation of homogeneity of variance. More than likely, the true reason for this is that these are the most common assessments included in statistical software. This can be observed firsthand for JASP users following along in this book in the examples shown in the next section.

Nonparametric Tests

This section will demonstrate how to run and interpret several nonparametric statistical analyses. These will include several alternatives to previously used parametric tests. In the interest of consistency, this section will use the same datasets previously used to run those tests, and it will also demonstrate how to quickly evaluate some of the assumptions listed above. Unfortunately, these tests cannot be completed in MS Excel, so all of the examples will be shown in JASP only. Please use Table 8.1 as your guide for selecting the appropriate alternative to a specific parametric test.

| Parametric Test | Nonparametric Alternative |
|---|---|
| PPM correlation | Spearman correlation |
| independent samples t test | Mann-Whitney U |
| dependent (paired) samples t test | Wilcoxon signed ranks test |
| one-way ANOVA | Kruskal-Wallis ranks ANOVA |
| Tukey HSD Post Hoc (if indicated by ANOVA) | Dunn's Post Hoc Test (if indicated by ANOVA) |

Spearman correlation for PPM in JASP

The PPM correlation was introduced in Chapter 3, and you may recall from the JASP example that several of the variables were initially loaded in as ordinal data. We switched those to scale for the example in Chapter 3, but that won't be necessary when running a nonparametric test, because it ranks the data for the analysis. Here's a link to the data again.

This test is run similarly to the way it was done previously. First click on the Regression module and select the classical correlation option. Next, move all the variables you wish to include in the correlation matrix over to the variables box. You should now see the correlation matrix already built in the results section. But as you can see, these are Pearson's r values. This can be changed by checking the box next to "Spearman's rho" and unchecking the "Pearson's r" box that was checked by default. Now your results should appear similar to Table 8.2 below. The interpretation of the findings is completed in the same way as the PPM r values and any other p value. For example, we would say that the Score is moderately related to Variable 2 with a rho value of 0.410, but it is not statistically significant as the p value is above 0.05.

| Variable | | Score | Variable 2 | Variable 3 | Variable 4 |
|---|---|---|---|---|---|
| Score | Spearman's rho | — | | | |
| | p-value | — | | | |
| Variable 2 | Spearman's rho | 0.410 | — | | |
| | p-value | 0.240 | — | | |
| Variable 3 | Spearman's rho | 0.110 | -0.418 | — | |
| | p-value | 0.762 | 0.232 | — | |
| Variable 4 | Spearman's rho | -0.015 | 0.426 | 0.097 | — |
| | p-value | 0.966 | 0.220 | 0.789 | — |

Individual variable evaluations of normality should be completed in the same manner demonstrated previously in Chapter 2. There are options to evaluate the normality assumption in a multivariate manner within the same correlation analysis window if the "Assumption Checks" menu is expanded. But, univariate normality is a precondition of evaluating multivariate normality, which means it should be evaluated first.[6]
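For readers curious about what happens behind the "Spearman's rho" checkbox, the sketch below shows one standard way to obtain the value: rank each variable, then compute the Pearson correlation on the ranks. The data are hypothetical and the function names are our own. This is an illustration of the idea, not a description of JASP's internal code.

```python
import math


def average_ranks(values):
    """Rank values from smallest (rank 1) to largest; ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def pearson_r(x, y):
    """Pearson product moment correlation between two equal-length lists."""
    mean_x, mean_y = sum(x) / len(x), sum(y) / len(y)
    sxy = sum((a - mean_x) * (b - mean_y) for a, b in zip(x, y))
    sxx = sum((a - mean_x) ** 2 for a in x)
    syy = sum((b - mean_y) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def spearman_rho(x, y):
    """Spearman's rho: the Pearson correlation of the ranked data."""
    return pearson_r(average_ranks(x), average_ranks(y))


# Hypothetical data: y always increases with x, but not in a straight line.
x = [1, 2, 3, 4, 5]
y = [1, 4, 9, 16, 100]
r_value = pearson_r(x, y)
rho_value = spearman_rho(x, y)
print(round(r_value, 3), round(rho_value, 3))
```

Because the ranks of the two variables agree perfectly, rho is 1 even though the extreme final value pulls Pearson's r well below 1. This is why the rank-based statistic is less affected by outliers and skew.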

Mann-Whitney U for an independent samples t test in JASP

You may recall from Chapter 4 that we were working with NFL data looking for differences in the mean yards per game passing statistic of quarterbacks. Hopefully you still have that saved file, but it is linked here again if not. Recall that you will need to create the grouping variable demonstrated in Chapter 4.

Let's first look at a quick way to evaluate normality and homogeneity for the independent samples t test variables in JASP. The setup is the same for the independent samples t test and the Mann-Whitney U, so this won't add any extra work. Simply click the T-tests module and select the classical version of the independent samples t test. Now move the variable of interest (Y/G) into the variables box and move the grouping variable into the grouping variable box. You should see that the results are already being populated, but we need to evaluate our assumptions first. Under the "Assumption Checks" heading, check the boxes next to "Normality" and "Equality of variances." Now you should see tables similar to Tables 8.3 and 8.4 in the Results section. Table 8.3 displays the results of the Shapiro-Wilk test, which can be interpreted in the same way as described in Chapter 2. Since the p value of each group is above 0.05, the data can be treated as normally distributed, so the regular independent samples t test can be run. Table 8.4 displays the results of the test of homogeneity of variance. It is interpreted similarly: the p value is above 0.05, meaning the assumption of homogeneity has not been violated. For future use, if even one of these assumptions (normality or homogeneity) is violated, a nonparametric option should be used. As has been shown, neither of these assumptions was violated in this dataset, but for the purposes of this example, we will ignore that and proceed as if the data were not normally distributed.

| | | W | p |
|---|---|---|---|
| Y/G | Older | 0.970 | 0.892 |
| | Recent | 0.971 | 0.901 |

Note. Significant results suggest a deviation from normality.

| | F | df | p |
|---|---|---|---|
| Y/G | 0.319 | 1 | 0.579 |

Switching to a Mann-Whitney U is completed by checking the box next to "Mann-Whitney" and unchecking the box next to "Student." The results should be similar to Table 8.5 below. The p value is interpreted in the same way as for other t tests. It is less than 0.05, so it could be said that there is a statistically significant difference between the two decades in terms of passing yards per game. Note that JASP still titles the table by the original selection, but provides a note below it to indicate that the test run was actually the Mann-Whitney U.

| | W | df | p |
|---|---|---|---|
| Y/G | 0.000 | | < .001 |

Note. Mann-Whitney U test.
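The Mann-Whitney statistic itself is built from ranks. As a minimal sketch with hypothetical passing yards per game (not the chapter's dataset), the scores from both groups are pooled and ranked, the ranks of the first group are summed, and the smallest possible rank sum is subtracted. The function names are our own, and we assume the reported statistic corresponds to the first group.

```python
def average_ranks(values):
    """Rank values from smallest (rank 1) to largest; ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def mann_whitney_u(group_1, group_2):
    """U for group_1: its pooled rank sum minus the minimum possible rank sum."""
    ranks = average_ranks(list(group_1) + list(group_2))
    n1 = len(group_1)
    rank_sum_1 = sum(ranks[:n1])
    return rank_sum_1 - n1 * (n1 + 1) / 2


# Hypothetical yards per game; every "older" value is below every "recent" one.
older = [180.2, 195.5, 201.1, 210.4]
recent = [240.3, 255.0, 262.7, 270.9]
u_separated = mann_whitney_u(older, recent)

# Same data, except one older value now overlaps the recent group.
older_overlap = [180.2, 245.0, 201.1, 210.4]
u_overlap = mann_whitney_u(older_overlap, recent)
print(u_separated, u_overlap)  # 0.0 1.0
```

A statistic of zero arises when the two groups do not overlap at all, which is the most extreme result possible. Each value in the first group that exceeds a value in the second group adds one to the statistic.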

Wilcoxon signed ranks for a paired samples t test in JASP

Paired samples tests are used when the same group is tested on multiple occasions. The nonparametric alternative for a dependent (paired) samples t test is the Wilcoxon signed ranks test. Back in Chapter 4, the paired example used theoretical data of NFL quarterback development, again looking at passing yards per game. The dataset can be found here.

Again, the setup for the analysis is nearly identical to the way it was previously run. Much like the Mann-Whitney U above, the assumption of normality can be evaluated by checking the "Normality" box, which uses the Shapiro-Wilk test as well. Again, we see that the p value is not statistically significant, so normality should be assumed, but we will ignore that for the purposes of this example and demonstrate the nonparametric test.

| | | | W | p |
|---|---|---|---|---|
| Pre 2010 | - | Post 2010 | 0.936 | 0.507 |

Note. Significant results suggest a deviation from normality.

Similar to the t test already run, this can be switched to a nonparametric alternative by deselecting "Student" and selecting "Wilcoxon signed-rank," which is the only other option available. The results are displayed in Table 8.7, where a statistically significant difference is observed (p ≤ 0.05). Again, the note below the table shows that the Wilcoxon was run instead of the paired samples t test.

| Measure 1 | | Measure 2 | W | df | p |
|---|---|---|---|---|---|
| Pre 2010 | - | Post 2010 | 6.000 | | 0.032 |

Note. Wilcoxon signed-rank test.
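The signed-rank statistic can be sketched in a few lines. Each pair is reduced to a difference, the absolute differences are ranked, and the ranks are summed separately for positive and negative differences. The data below are hypothetical, the function names are our own, and which of the two sums a given program reports as W is an assumption to verify in its documentation.

```python
def average_ranks(values):
    """Rank values from smallest (rank 1) to largest; ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def wilcoxon_signed_rank(measure_1, measure_2):
    """Return (sum of ranks of positive differences, sum for negative ones)."""
    # Pairs with no change carry no sign and are dropped before ranking.
    diffs = [a - b for a, b in zip(measure_1, measure_2) if a != b]
    ranks = average_ranks([abs(d) for d in diffs])
    w_plus = sum(r for r, d in zip(ranks, diffs) if d > 0)
    w_minus = sum(r for r, d in zip(ranks, diffs) if d < 0)
    return w_plus, w_minus


# Hypothetical yards per game for six quarterbacks at two time points.
pre = [210, 225, 198, 240, 232, 205]
post = [228, 231, 215, 236, 250, 221]
w_plus, w_minus = wilcoxon_signed_rank(pre, post)
print(w_plus, w_minus)  # 1.0 20.0
```

Only one quarterback declined, and by the smallest margin, so nearly all of the rank total sits on one side. A lopsided split like this is what produces a small p value.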

Kruskal-Wallis ranks ANOVA for a one-way ANOVA in JASP

Much like all the examples before, the setup for the nonparametric alternative for the ANOVA is identical. In the previous example we were looking at training methods and a strength measure. The dataset can be found here. After opening up the data in JASP, select the ANOVA module and the classical version of the ANOVA. Move the dependent and grouping variables over and the results should begin populating.

Again, some assumptions can be checked here. Homogeneity can be evaluated by checking the box, but normality must be checked earlier by the univariate method demonstrated in Chapter 2.

Similar to before, this example will pretend that the data are not normally distributed. Switching to a nonparametric alternative is a little different for ANOVAs, but it is equally easy. Simply scroll down and expand the "Nonparametrics" menu. Move the grouping variable over (Training_method) and then the results of the Kruskal-Wallis test should populate the results section under the original ANOVA that was run (Table 8.8 below). The p value of less than 0.001 indicates a statistically significant difference among the training methods.

| Factor | Statistic | df | p |
|---|---|---|---|
| Training_Method | 16.705 | 2 | < .001 |
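The Kruskal-Wallis statistic, usually written H, extends the same ranking idea to three or more groups. The sketch below uses hypothetical strength scores with no tied values, so the tie correction that software typically applies is omitted. The function names are our own. With three groups there are 2 degrees of freedom, and in that special case the chi-square p value reduces to exp(-H / 2).

```python
import math


def average_ranks(values):
    """Rank values from smallest (rank 1) to largest; ties share the mean rank."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        shared = (i + j) / 2 + 1
        for k in range(i, j + 1):
            ranks[order[k]] = shared
        i = j + 1
    return ranks


def kruskal_wallis_h(*groups):
    """Kruskal-Wallis H from pooled ranks (no tie correction applied)."""
    pooled = [x for g in groups for x in g]
    ranks = average_ranks(pooled)
    n_total = len(pooled)
    total = 0.0
    start = 0
    for g in groups:
        rank_sum = sum(ranks[start:start + len(g)])
        total += rank_sum ** 2 / len(g)
        start += len(g)
    return 12 / (n_total * (n_total + 1)) * total - 3 * (n_total + 1)


# Hypothetical strength scores for three training methods.
bodyweight = [50, 52, 55, 57]
machines = [60, 63, 66, 58]
free_weights = [70, 72, 68, 75]

h_stat = kruskal_wallis_h(bodyweight, machines, free_weights)
p_value = math.exp(-h_stat / 2)  # valid only because df = 2
print(round(h_stat, 3), round(p_value, 4))

# Applying the same df = 2 shortcut to the statistic reported in the table.
p_from_table = math.exp(-16.705 / 2)
print(p_from_table < 0.001)  # True
```

The last two lines apply the shortcut to the statistic of 16.705 with 2 degrees of freedom, and the result falls below 0.001, in agreement with the reported p value.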

Hopefully you recall that we aren't done yet. An ANOVA is used for comparison of 3 or more groups, and establishing a statistical difference between the groups isn't enough. A post-hoc test must be run to identify where the statistical difference is. Since the data are not normally distributed, the Tukey HSD should be replaced by a nonparametric alternative. The Dunn test is the nonparametric post hoc test to replace the Tukey HSD. This can be completed by expanding the "Post Hoc Tests" menu, moving the grouping variable over and checking the box next to Dunn. The results should now be displayed. The standard Tukey table will still appear first and you may need to scroll down to see the Dunn's post-hoc analysis results. These appear in Table 8.9 below. Note that p value adjustments also appear since multiple comparisons were run. These will be discussed further in Chapter 9. Whenever multiple comparisons are run, there is an increase in the chance for a type I error, so these adjustments are often used to reduce that risk.

| Comparison | z | W i | W j | p | p bonf | p holm |
|---|---|---|---|---|---|---|
| bodyweight - free_weights | -4.040 | 6.850 | 22.750 | < .001 | < .001 | < .001 |
| bodyweight - machines | -2.554 | 6.850 | 16.900 | 0.005 | 0.016 | 0.011 |
| free_weights - machines | 1.487 | 22.750 | 16.900 | 0.069 | 0.206 | 0.069 |

Using the Bonferroni adjusted p values (p bonf in Table 8.9), it is evident that there is a statistical difference between the bodyweight and free weight methods of strength training (p < 0.001). Similarly, there is a statistical difference between bodyweight and machines (p = 0.016). This should make some sense as there is a limited amount of overload that can be applied by only using bodyweight.
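The two adjustments shown in the post hoc table can be reproduced with simple arithmetic. In the sketch below, the function names are our own. The second and third p values are the rounded unadjusted values from the table, and the first is a placeholder we assume for the value reported only as "< .001". The Bonferroni method multiplies every p value by the number of comparisons, while the Holm method multiplies the smallest by that number, the next smallest by one less, and so on.

```python
def bonferroni(p_values):
    """Multiply each p value by the number of comparisons, capped at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


def holm(p_values):
    """Holm step-down adjustment, returned in the original order."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])
    adjusted = [0.0] * m
    running_max = 0.0
    for position, idx in enumerate(order):
        candidate = min(1.0, (m - position) * p_values[idx])
        # An adjusted value may never be smaller than one ranked before it.
        running_max = max(running_max, candidate)
        adjusted[idx] = running_max
    return adjusted


# 0.0001 is an assumed placeholder for the p value reported as "< .001".
raw_p = [0.0001, 0.005, 0.069]
p_bonf = [round(p, 3) for p in bonferroni(raw_p)]
p_holm = [round(p, 3) for p in holm(raw_p)]
print(p_bonf)  # [0.0, 0.015, 0.207]
print(p_holm)  # [0.0, 0.01, 0.069]
```

Small differences in the third decimal place from the values in the table are expected, most likely because the inputs used here were already rounded to three decimals.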

There are quite a few other nonparametric statistical tests that can be run that are not included in this chapter. This chapter sought to introduce some of the more commonly used assessments that can serve as alternatives to those previously discussed, but does not serve as an exhaustive list of all nonparametric tests. As always, test selection should be completed based on specific individual scenarios and it is possible that a scenario may require a more complicated statistical test or one that is different from those shown above. Fortunately, there are many free resources on the internet where these tests can be quickly learned.

1. Vincent, W., & Weir, J. (2012). Statistics in Kinesiology. 4th ed. Human Kinetics: Champaign, IL, USA.
2. Tabachnick, B., & Fidell, L. (2019). Using Multivariate Statistics. 7th ed. Pearson: Boston, MA, USA.
3. Frost, J. (2020). Hypothesis Testing. Statistics by Jim Publishing: State College, PA, USA.
4. Ghasemi, A., & Zahediasl, S. (2012). Normality tests for statistical analysis: a guide for non-statisticians. International Journal of Endocrinology and Metabolism, 10(2), 486–489. https://doi.org/10.5812/ijem.3505
5. Bailey, C. (2019). Longitudinal Monitoring of Athletes: Statistical Issues and Best Practices. J Sci Sport Exerc. 1:217-227.
6. Gnanadesikan, R. (1977). Methods for statistical analysis of multivariate observations. Wiley: New York, NY, USA.